Table of Contents

- The Fastest Way to Lose Trust Is to Build with AI and No Security

- What Went Wrong? Everything.

- What Is Vibe Coding

- Why AI-Created Code Can Be a Risk Multiplier

- Fast Is Fine. Reckless Is Not.

- So What Should Responsible Builders Do?

- 1. Assume the AI Is Wrong Until Proven Otherwise

- 2. Add Guardrails Before You Go Live

- 3. Run a Red Team Test Before You Hit Publish

- 4. Bring in a Security Partner Who Knows AI

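- How PsyberEdge Can Help

- The Bigger Picture: AI Is Not the Problem. Bad Process Is.

- Book a Strategy Session with PsyberEdge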

The Fastest Way to Lose Trust Is to Build with AI and No Security

Just because you can build an app in a weekend with AI doesn’t mean you should. Especially not if it handles private messages, location data, selfies, and government IDs.

That’s exactly what happened with the Tea App—an AI-coded social tool that promised safety but delivered catastrophe. Billed as a secure space for women to vet and warn each other about dangerous men, the app was positioned as both progressive and protective.

But under the hood, there was no security. No encryption. No password protection. No firewalls. Just a cloud bucket wide open to the internet, and a viral app built on vibes.

What Went Wrong? Everything.

Let’s break it down:

- The code was mostly AI-generated

- No experienced engineering review

- The Firebase storage bucket was left publicly accessible

- Selfies and driver’s licenses were never deleted

- No red team tested for data exposure

- No blue team monitored for misuse

- The app went viral with zero infrastructure readiness

Within 24 hours, bad actors found the open storage bucket. What they uncovered was over 59 gigabytes of user data: selfies, messages, IDs, and location info, all stored unencrypted. The kind of data that makes identity theft and doxxing child’s play.

What started as a feel-good app became a worst-case security breach, and the internet turned it into a spectacle.

What Is Vibe Coding

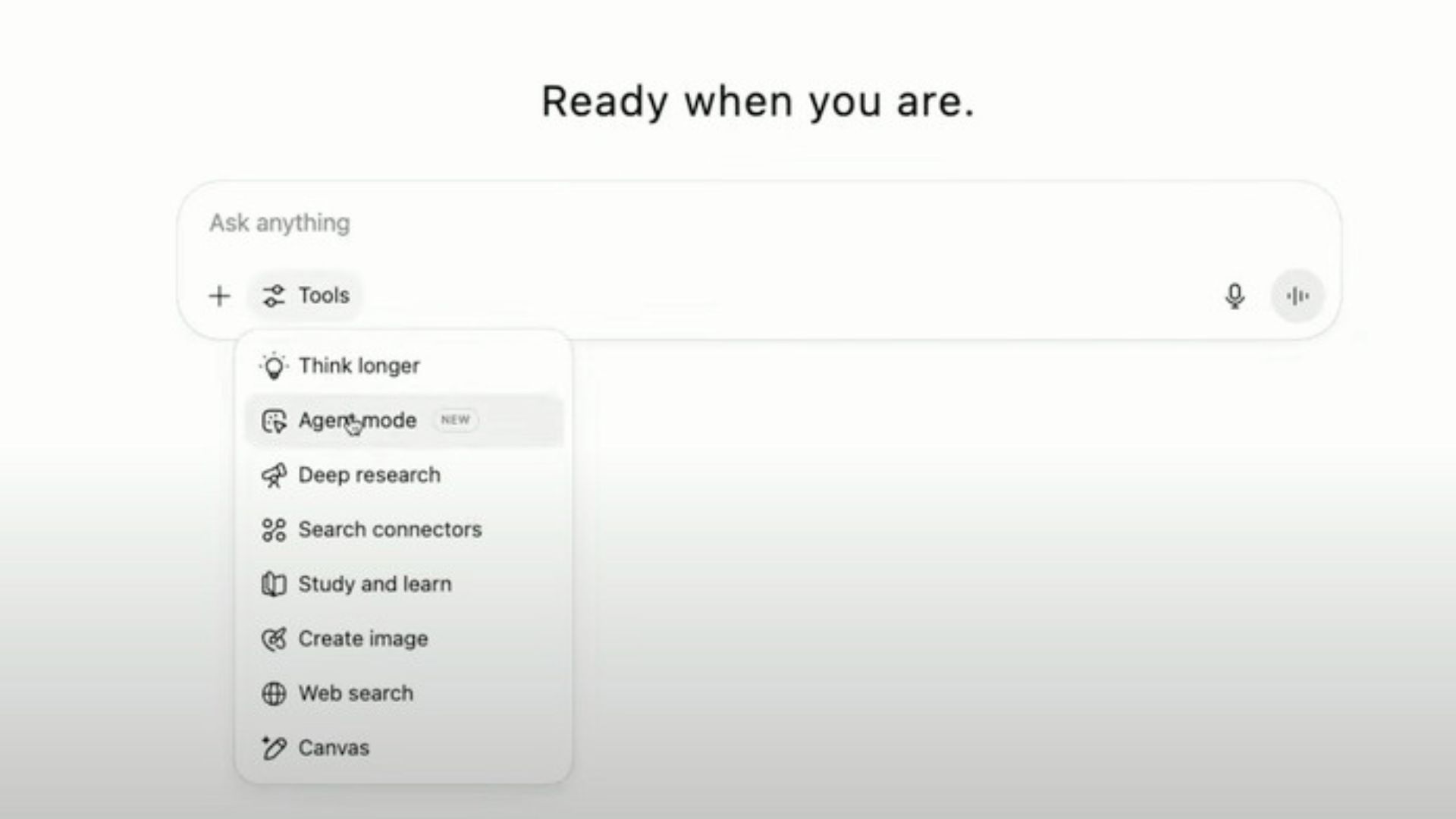

Vibe coding is a growing trend where apps are shipped fast using AI tools without much thought for architecture, security, or scale. It’s development by mood board.

The appeal is obvious:

- Spin up an app in days

- Use tools like Replit, GPT, or low-code platforms

- Skip expensive dev teams

- Focus on the story and the launch

But here’s the problem: security doesn’t care about your story. It cares about how your code behaves in the wild. And when you skip the fundamentals, the breach is just a matter of when, not if.

Why AI-Created Code Can Be a Risk Multiplier

AI is great at generating code, but it does not understand your security posture. It doesn’t infer which data is sensitive or where encryption is needed unless explicitly prompted. It won’t stop you from storing passport photos unencrypted if you don’t ask it to encrypt them.

Even worse, LLMs will happily hallucinate solutions or offer insecure defaults if you’re not specific. They don’t know that “public by default” in Firebase is a loaded gun. They don’t know that “store images in S3” without access control is a breach waiting to happen.

And most vibe coders? They’re moving too fast to even notice.
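Open access rules like the ones described above are easy to check for mechanically. Here is a minimal Python sketch that flags Firebase-style security rules granting access to anyone. The function name `find_open_rules` and the sample rules text are illustrative assumptions, and the regex is a rough heuristic rather than a real rules parser, so it will miss other kinds of misconfiguration.

```python
import re

# Heuristic: match "allow <ops>: if true;" statements, which grant access to anyone.
OPEN_RULE = re.compile(r"allow\s+([\w,\s]+?)\s*:\s*if\s+true\s*;")


def find_open_rules(rules_text: str) -> list[tuple[int, str]]:
    """Return (line_number, operations) for each unconditional allow statement."""
    findings = []
    for line_number, line in enumerate(rules_text.splitlines(), start=1):
        match = OPEN_RULE.search(line)
        if match:
            operations = ", ".join(op.strip() for op in match.group(1).split(","))
            findings.append((line_number, operations))
    return findings


# Hypothetical rules file, written only to illustrate the check.
SAMPLE_RULES = """\
service firebase.storage {
  match /b/{bucket}/o {
    match /{allPaths=**} {
      allow read, write: if true;
    }
  }
}
"""

if __name__ == "__main__":
    for line_number, operations in find_open_rules(SAMPLE_RULES):
        print(f"line {line_number}: unconditional allow for {operations}")
```

A check like this could run in CI so that a wide-open rule fails the build instead of reaching production.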

Fast Is Fine. Reckless Is Not.

Yes, the new AI tools are powerful. You can build real apps in a fraction of the time it used to take. But the speed is a trap.

Because it feels like progress.

Because the demo works.

Because the users are downloading.

Because your investor just liked the LinkedIn post.

But when you skip security for speed, you’re not launching a product—you’re lighting a fuse.

So What Should Responsible Builders Do?

Great question. Here’s what smart teams are doing right now:

1. Assume the AI Is Wrong Until Proven Otherwise

Treat AI like a fast intern. It can help, but every single thing it does needs to be checked by someone who understands the bigger picture.

2. Add Guardrails Before You Go Live

Just because you can deploy doesn’t mean you should. Run security tests. Encrypt data at rest. Use access control. Add logging and alerts. Protect your storage buckets.
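As one way to picture “use access control” and “add logging and alerts” together, here is a small Python sketch of a deny-by-default read check that logs every refusal. The `StoredObject` type, the `can_read` function, and the owner-only policy are assumptions made up for this example; a real app would lean on its platform’s own rules and identity system.

```python
import logging
from dataclasses import dataclass
from typing import Optional

logger = logging.getLogger("storage_audit")


@dataclass(frozen=True)
class StoredObject:
    # Hypothetical record describing one uploaded file and who owns it.
    key: str
    owner_id: str


def can_read(requester_id: Optional[str], obj: StoredObject) -> bool:
    """Deny by default: only the authenticated owner may read the object."""
    allowed = requester_id is not None and requester_id == obj.owner_id
    if not allowed:
        # Denied reads are logged so unusual access patterns can feed alerts.
        logger.warning("denied read of %s by %s", obj.key, requester_id or "anonymous")
    return allowed
```

The design choice that matters is the default: anything not explicitly allowed is refused, and the refusal leaves a trace someone can alert on.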

3. Run a Red Team Test Before You Hit Publish

Let someone try to break what you built. Red team exercises simulate real-world attacks and show you where you’re vulnerable.
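A full red team exercise goes much further, but one of the first things an attacker tries is simply requesting your storage URLs with no credentials. The Python sketch below does that using only the standard library. The names `fetch_status` and `find_exposed_urls` are illustrative, and treating an HTTP 200 as “exposed” is a simplifying assumption.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Send an unauthenticated GET and return the HTTP status code."""
    request = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 401/403/404 and similar arrive as exceptions; report their code.
        return error.code


def find_exposed_urls(
    urls: Iterable[str],
    status_of: Callable[[str], int] = fetch_status,
) -> list[str]:
    """Return the URLs that answer 200 to a request carrying no credentials."""
    return [url for url in urls if status_of(url) == 200]
```

Point it at the object URLs your own app writes to, and only at storage you own or are authorized to test. Any hit is a finding to fix before launch. Network failures (for example DNS errors) are not caught here and will raise.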

4. Bring in a Security Partner Who Knows AI

Security for traditional stacks is not the same as security for LLM-powered or AI-generated apps. You need a team that knows how to test prompts, validate AI output, and audit auto-generated backend logic.
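“Validate AI output” can start very small. The Python sketch below assumes a hypothetical setup where a model returns a JSON object with an `action` field, and it rejects anything that is not on an explicit allow-list. The action names and the `parse_model_action` function are invented for illustration, not taken from any particular framework.

```python
import json

# Hypothetical allow-list: the only actions the app will run for a model.
ALLOWED_ACTIONS = {"search", "summarize"}


def parse_model_action(raw_output: str) -> dict:
    """Parse model output and reject anything outside the allow-list."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError as error:
        raise ValueError("model output is not valid JSON") from error
    if not isinstance(data, dict):
        raise ValueError("model output must be a JSON object")
    action = data.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError("model output requested an action that is not allowed")
    return data
```

The point is that model output gets treated like any other untrusted input: parsed, checked, and refused when it steps outside what the app expects.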

How PsyberEdge Can Help

We built PsyberEdge for moments like this, when innovation outpaces good judgment. Our team blends offensive and defensive cyber experience with real-world AI implementation.

We help clients:

- Audit AI-generated code for security gaps

- Harden Firebase, S3, and similar cloud storage before deployment

- Run red and blue team simulations to prepare for real-world attacks

- Build internal AI guardrails that catch risks before they escalate

- Secure data flows across chatbots, web apps, and mobile platforms

We’ve seen what happens when apps go viral and security is an afterthought. We’ve helped teams clean up the mess. We’d rather help you prevent it altogether.

The Bigger Picture: AI Is Not the Problem. Bad Process Is.

AI doesn’t write insecure apps. People do—by trusting it blindly, skipping validation, and prioritizing vibes over verification.

The Tea App breach is a warning. Not just for developers. For founders. For investors. For everyone racing toward the AI future without a seatbelt.

So if you’re building fast, good for you.

Now build smart.

Book a Strategy Session with PsyberEdge

We’ll review your app, your tech stack, and your AI integrations. We’ll test your assumptions and help you close the gaps. Before your users find them for you.

Move fast. Protect what matters. Build with PsyberEdge.